The idea of seeing round corners without using a mirror may sound magical but, in the past ten years, non-line-of-sight (NLOS) imaging has become an increasingly active research field, with potential applications including surveillance, self-driving cars and fire and rescue. Now researchers in China have found a novel way to detect the arrival times of scattered photons with picosecond resolution, producing sharper images than possible with a standard camera without requiring expensive, specialist equipment.

The most common method for NLOS imaging measures the time-of-flight of scattered photons. Multiple laser pulses are emitted sequentially and focused at a surface within the line of sight. Some of these photons then scatter off the concealed object before reaching the detector. The detector records the time between emission and detection and uses it to infer the distance each photon has travelled. A computer algorithm then works out the shape of the concealed object from the path lengths of the scattered photons.

“The precision with which I can build a 3D image depends one-to-one on how good my temporal resolution is,” explains optical physicist Daniele Faccio of the University of Glasgow. “How precisely can I say how long it took for a photon to go round the corner, hit an object and come back again?”

Expensive and hard to use

In 2012, the first work on NLOS imaging achieved a temporal resolution of about 10 ps by using a streak camera similar to the type used in ultrafast optics. “You can buy streak cameras with 1 ps resolution,” says Faccio, “but they’re relatively hard to use and they cost between £100,000 and £200,000.”

Moreover, streak cameras do not offer single-photon sensitivity, which is important as scattered signals can be very weak. Recent work, therefore, has focused on digital single-photon detectors, which are much cheaper and more user-friendly. However, their time resolution is only about 100 ps, which restricts the image resolution to the centimetre scale.

Now, Jian-Wei Pan of the University of Science and Technology of China and colleagues have developed a technique that improves this time resolution dramatically. They send one “signal” pulse from an infrared laser to the visible wall, allowing the photons to scatter and return into a lithium niobate waveguide. A tiny fraction of a second later, they send a second “pump” laser pulse into the waveguide. If a scattered signal photon arrives at the same time as a pump photon, a visible photon is produced.

Non-linear optics

“When the pump and signal photons enter the waveguide, they undergo frequency upconversion through a nonlinear optics process,” explains team member Feihu Xu. “That produces [a photon at] another wavelength that can be detected by a single photon detector…The single photon detector only works in the visible wavelengths, so if the pump does not mix with the signal, there’s no detection.”

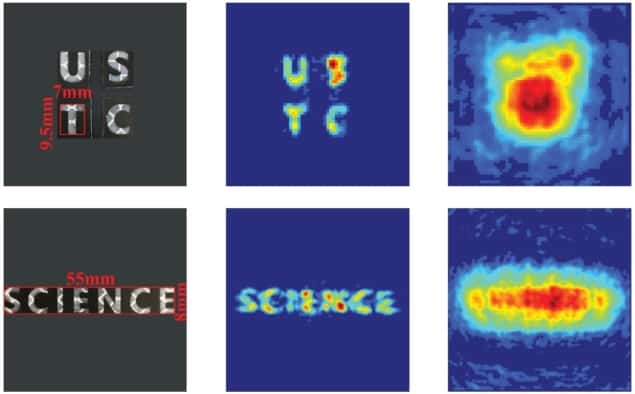

By varying the time spacing between the signal and pump pulses, the researchers can ascertain the times at which scattered photons arrive at each pixel in turn. They achieved a time resolution of about 1.4 ps, allowing them to obtain an order of magnitude improvement in image sharpness over anything achieved previously.
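The link between timing and sharpness can be checked with a little arithmetic. The following is a minimal sketch, not the team's reconstruction method: it simply multiplies each time resolution quoted above by the speed of light. The function names are illustrative, and halving the path length to estimate depth assumes a simple out-and-back geometry, which is a simplification of the real multi-bounce path.

```python
# Speed of light in vacuum, in metres per second (exact SI value).
C = 299_792_458.0


def path_resolution_mm(time_resolution_ps: float) -> float:
    """Distance light travels during the given time, in millimetres."""
    return C * time_resolution_ps * 1e-12 * 1e3


def depth_resolution_mm(time_resolution_ps: float) -> float:
    """Assumption: an out-and-back path, so depth is half the path length."""
    return path_resolution_mm(time_resolution_ps) / 2.0


if __name__ == "__main__":
    # Time resolutions quoted in the article, in picoseconds.
    for label, dt_ps in [("single-photon detector", 100.0),
                         ("upconversion gating", 1.4)]:
        print(f"{label}: {dt_ps} ps -> "
              f"path {path_resolution_mm(dt_ps):.2f} mm, "
              f"depth ~{depth_resolution_mm(dt_ps):.2f} mm")
```

Under these assumptions, 100 ps corresponds to about 3 cm of path, while 1.4 ps corresponds to roughly 0.4 mm of path, or about 0.2 mm of depth.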

Long acquisition times

“It’s a very interesting accomplishment that, to the best of my knowledge, has improved upon the state-of-the-art in terms of spatial resolution in the non-line of sight volume over any previous technique based only on time-of-flight,” says electrical engineer Vivek Goyal of Boston University in the US. He notes, however, that “to make these measurements with very fine time resolution, you have to sweep the time between the signal and the pump. That multiplies your acquisition time.”
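Goyal's point about acquisition time can be illustrated with a rough model. This is a sketch under stated assumptions: one measurement is taken per scan point per pump delay, each with the same dwell time. The scan size, time window, step and dwell time below are hypothetical values chosen for illustration, not figures from the experiment.

```python
import math


def delay_steps(time_window_ps: float, step_ps: float) -> int:
    """Number of pump-delay settings needed to cover the time window."""
    return math.ceil(time_window_ps / step_ps)


def acquisition_time_s(n_scan_points: int, n_delays: int, dwell_s: float) -> float:
    """Total time if every scan point is measured once at every delay."""
    return n_scan_points * n_delays * dwell_s


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    scan_points = 32 * 32      # grid of points scanned on the visible wall
    window_ps = 200.0          # span of photon arrival times to cover
    step_ps = 1.0              # spacing between pump delays
    dwell_s = 1e-3             # measurement time per point per delay

    n_delays = delay_steps(window_ps, step_ps)
    swept = acquisition_time_s(scan_points, n_delays, dwell_s)
    single = acquisition_time_s(scan_points, 1, dwell_s)
    print(f"{n_delays} delay steps: {swept:.1f} s, versus {single:.3f} s for one delay")
```

In this simple model the total time grows in direct proportion to the number of delay steps, so halving the step size doubles the acquisition time.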

Faccio agrees: “If I’m driving and I want to see another car approaching from behind a corner, I don’t need 200 micron resolution – all I need to know is whether there is a car…and I certainly can’t wait an hour for the results,” he says. “What people are focusing on now is ‘how can I do this faster, with less laser light, and how can I make the volume that I can image bigger’. This is very neat, it’s very high precision, but it’s not obvious to me what the killer application is.”

Xu acknowledges that “right now a bottleneck is the imaging speed. It takes us maybe minutes, but there’s a lot of room to improve. For stationary monitoring by the police or the military, even slow imaging could have some applications, but for general applications we definitely need to solve the imaging speed problem.”

The research is described in Physical Review Letters.