Deep learning could hold the key to making sense of proton collisions generated in the world’s premier particle accelerator. That is the message from physicists in Europe and the US who have shown how an algorithm developed for language translation can efficiently filter out noise from data taken by detectors at CERN’s Large Hadron Collider. The algorithm could give physicists the best chance of discovering exotic new particles once the LHC has been upgraded.

The LHC slams protons together at incredibly high energies in order to generate a range of massive particles. This could include hypothetical particles not described by the Standard Model of particle physics – the discovery of which is a primary goal of the collider.

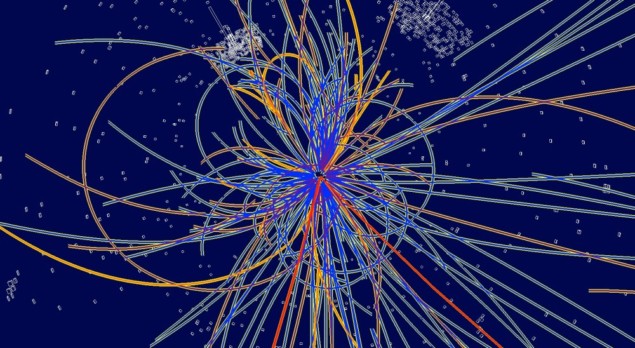

The LHC actually collides bunches containing billions of protons in order to ensure a reasonable chance that at least one proton from one bunch will interact with a proton in the other bunch. One major challenge in interpreting collider data is distinguishing the particles produced by (sought-after) head-on collisions from those resulting from glancing blows. The latter, known as pile-up, mainly consist of pions that end up dotted around the detector and make it harder to establish the presence of any new particles.

Pile-up is set to become a particular problem in the next few years as the LHC’s collision rate is ramped up. From 2027, the High-Luminosity LHC is set to generate around 200 pile-up events per bunch collision, about an order of magnitude more than the LHC was producing five years ago.

Tracing back

Physicists have devised several ways to focus on interesting collisions. One simple approach is to consider the tracks left by charged particles as they travel through a detector, and only keep events with tracks that trace back to head-on collisions – originating from what is known as the primary vertex.
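The track-based approach can be illustrated with a minimal sketch. The `Track` class, the `keep_primary_tracks` function, the `max_dz` tolerance and the sample numbers are all hypothetical, chosen only to show the idea of keeping charged tracks that point back to the primary vertex; real reconstruction software is considerably more involved.

```python
from dataclasses import dataclass


@dataclass
class Track:
    pt: float        # transverse momentum (illustrative units: GeV)
    z_origin: float  # extrapolated origin along the beam axis (illustrative units: mm)


def keep_primary_tracks(tracks, primary_z, max_dz=1.0):
    """Keep charged tracks whose origin lies within max_dz of the primary vertex.

    max_dz is an assumed tolerance, not a value taken from the article.
    """
    return [t for t in tracks if abs(t.z_origin - primary_z) <= max_dz]


# Two tracks near z = 0 (the assumed primary vertex) and two from elsewhere.
tracks = [Track(25.0, 0.2), Track(1.1, 14.7), Track(8.3, -0.4), Track(0.9, -32.0)]
primary = keep_primary_tracks(tracks, primary_z=0.0)
print([t.pt for t in primary])  # [25.0, 8.3]
```

A filter like this only works for charged particles, which leave tracks; neutral particles need a different treatment.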

A more sophisticated alternative known as PUPPI does this as well as sifting through neutral particles produced in the collider. It does so by establishing the provenance of the charged particles around each neutral particle and then calculating the probability that the latter originated at the primary vertex given its relationship with the former.

In the latest work, Benedikt Maier of CERN, Siddharth Narayanan at Flagship Pioneering and colleagues set out to achieve the same end using machine learning. Whereas PUPPI relies on step-by-step calculations to directly establish whether certain particles originate from the primary vertex, the algorithm in this case – an advanced type of neural network that the researchers dub PUMA – learns the relationship between particle properties and collision origin after being trained with a dataset comprising multiple input-output pairs.

This is not the first artificial neural network devised to try to deal with the problem of pile-up at the LHC. In 2017, for example, Matthew Schwartz of Harvard University in the US and colleagues reported having designed a so-called convolutional neural network to clean up the output from the ATLAS and CMS detectors expressed in the form of images – the intensity of each pixel representing particles’ energy distribution. By teaching the network to associate images of all neutral particles with the corresponding ones showing just neutral particles from the primary vertex, they found that the algorithm could then generate cleaned-up images when fed fresh noisy data at its input.

Transformer algorithm

According to Maier, however, this and other machine-learning-based methods rely on results from PUPPI as part of their input. PUMA, in contrast, removes pile-up simply based on raw detector data. It does so using an algorithm known as a transformer, which was designed to convert a phrase in one language to the equivalent phrase in another language. Re-purposed for particle physics, it instead transforms data representing a series of particles from a collision event into a sequence of numbers between 0 and 1 – the probabilities that each respective particle comes from the primary vertex.

Whereas other machine translators tend to focus only on a word’s nearest neighbours when working out the meaning of a string of words, transformers also account for links between words spaced further apart. They do so using a process known as attention, which involves representing a word as a vector of features, multiplying that vector by certain matrices and then combining the outcome of those calculations with the equivalent from another word via the dot-product function.
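The attention step can be sketched in a few lines of plain Python. This follows the standard scaled dot-product form used in transformers, in which each vector is multiplied by query, key and value matrices and the dot products are normalized with a softmax. The scaling and softmax are part of that standard formulation rather than something spelled out above, and the function names and toy numbers are purely illustrative.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors, w_query, w_key, w_value):
    """Scaled dot-product self-attention over a sequence of feature vectors."""
    queries = [matvec(w_query, x) for x in vectors]
    keys = [matvec(w_key, x) for x in vectors]
    values = [matvec(w_value, x) for x in vectors]
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # Dot product of this element's query with every element's key.
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # New vector: attention-weighted mix of all value vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
    return outputs


# Toy input: three 2-component vectors; identity matrices stand in for learned ones.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
mixed = self_attention(tokens, identity, identity, identity)
for row in mixed:
    print([round(x, 3) for x in row])
```

Because every output is a weighted mix over the whole sequence, distant elements can influence one another just as easily as neighbours.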

PUMA, which stands for Pile-Up Mitigation using Attention, does likewise by encoding each particle as a vector comprising parameters such as particle type, energy and angle. It then uses the attention process to generate a new set of vectors that reflect each particle’s relationship with the other particles, and feeds these vectors into a simple neural network that distills the information into one numerical value per particle – the origin probabilities. By training the network using input vectors tied to known binary probabilities, the difference between the calculated and expected outputs can be used to iteratively tweak the attention matrices so that in future the algorithm can recognize whether fresh raw data correspond to particles from the primary vertex or from pile-up.
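The final stage, turning each particle’s vector into a probability and learning from known labels, can be sketched as follows. This is not the researchers’ code: it assumes a single linear layer with a sigmoid output, uses binary cross-entropy as the measure of the difference between calculated and expected outputs, and updates only that output layer by hand. In a full transformer the attention matrices would be adjusted in the same training loop, usually by an automatic-differentiation library. All names and numbers are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def predict(weights, bias, vector):
    """Probability (between 0 and 1) that a particle comes from the primary vertex."""
    return sigmoid(sum(w * x for w, x in zip(weights, vector)) + bias)


def bce(probs, labels):
    """Binary cross-entropy between predicted probabilities and 0/1 labels."""
    eps = 1e-12  # guards against log(0)
    return -sum(y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
                for p, y in zip(probs, labels)) / len(labels)


def train_step(weights, bias, vectors, labels, lr=0.5):
    """One gradient-descent update of the output layer (assumed learning rate)."""
    n = len(vectors)
    errors = [predict(weights, bias, x) - y for x, y in zip(vectors, labels)]
    new_weights = [w - lr * sum(e * x[i] for e, x in zip(errors, vectors)) / n
                   for i, w in enumerate(weights)]
    new_bias = bias - lr * sum(errors) / n
    return new_weights, new_bias


# Made-up 2-component vectors standing in for attention outputs.
vectors = [[2.0, 0.1], [1.5, 0.3], [0.2, 1.8], [0.1, 2.2]]
labels = [1, 1, 0, 0]  # 1 = primary vertex, 0 = pile-up

weights, bias = [0.0, 0.0], 0.0
initial_loss = bce([predict(weights, bias, x) for x in vectors], labels)
for _ in range(200):
    weights, bias = train_step(weights, bias, vectors, labels)
probs = [predict(weights, bias, x) for x in vectors]
final_loss = bce(probs, labels)
print(round(initial_loss, 3), round(final_loss, 3))
print([round(p, 3) for p in probs])
```

After training, the first two toy particles score above 0.5 and the last two below it, mirroring the split between primary-vertex and pile-up labels.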

Detector snapshots

The researchers trained their network with 200,000 “detector snapshots”, which they generated using a simulation of the CMS detector produced by the DELPHES computer program. Each snapshot comprises the remnants of one main proton collision and around 140 glancing blows. This amounts to about 5000 particles per snapshot and therefore one billion input vectors and associated probabilities overall. They then used further simulation data to compare the performance of PUMA with classical algorithms such as PUPPI. In particular, they focussed on net transverse momentum – which is zero when the colliding protons fly towards one another and should remain so after the collision once all extraneous particles have been removed from the data.
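The transverse-momentum check can be illustrated with a toy calculation. The sketch below assumes each particle is described by a transverse momentum and an azimuthal angle, and that pile-up removal is applied by weighting each particle’s contribution with its origin probability. That weighting scheme is an assumption made for illustration, not a description of the researchers’ procedure, and the function name and numbers are hypothetical.

```python
import math


def net_transverse_momentum(particles, weights):
    """Magnitude of the weighted vector sum of transverse momenta.

    particles: list of (pt, phi) pairs, with phi the azimuthal angle in radians.
    weights:   one value per particle, here an assumed primary-vertex probability.
    """
    px = sum(w * pt * math.cos(phi) for (pt, phi), w in zip(particles, weights))
    py = sum(w * pt * math.sin(phi) for (pt, phi), w in zip(particles, weights))
    return math.hypot(px, py)


# Two back-to-back particles from the main collision plus one pile-up particle.
particles = [(30.0, 0.0), (30.0, math.pi), (5.0, math.pi / 2)]

raw = net_transverse_momentum(particles, [1.0, 1.0, 1.0])      # nothing removed
cleaned = net_transverse_momentum(particles, [1.0, 1.0, 0.0])  # pile-up removed
print(round(raw, 6), round(cleaned, 6))
```

In this toy event the pile-up particle alone is responsible for the imbalance, so assigning it zero weight restores a net transverse momentum of essentially zero.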

The researchers found that calculations of net transverse momentum based on PUMA pile-up removal got closer to the best-case scenario – simulated samples without pile-up – than did those based on removal by the other pile-up algorithms. They now plan to test PUMA using real data from one specific sub-detector to be installed in CMS. However, Maier points out that, for all its improvement over rival schemes, the new algorithm remains significantly at odds with the best-case scenario. “It is for future research to see what is missing still in the model,” he says.

Matteo Cacciari of Université Paris Cité in France, who was not involved in the latest research, welcomes the “excellent results”, pointing out that by design machine learning makes use of a much wider range of information than conventional techniques. But he adds that it is also harder to understand exactly where this and other neural networks get their “discriminating power” from, making it difficult, he argues, to spot any unwanted biases in the algorithm. “In science it’s always better to understand something as extensively as possible,” he says.

The research is reported in Machine Learning: Science and Technology.