Photoacoustic imaging is a hybrid technique used to acquire molecular, anatomic and functional information over spatial scales ranging from microns to millimetres, at depths from hundreds of microns to several centimetres. A super-resolution photoacoustic imaging approach – in which very small targets, such as red blood cells or droplets of injected dye, are localized in each of many image frames and the localized positions are superimposed – can achieve extremely high spatial resolution. This “localization imaging” method significantly improves the spatial resolution in clinical studies, but at the expense of temporal resolution, because many frames must be acquired.
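
To make the localization idea concrete, here is a minimal Python sketch. It assumes each frame is a 2D grid of non-negative photoacoustic amplitudes containing isolated point-like absorbers, finds local maxima above a threshold, refines each position with an intensity-weighted centroid, and adds a count to a grid that is `upsample` times finer. The function names, the threshold and the upsampling factor are hypothetical illustration choices, not the processing used in the study.

```python
from itertools import product

# 3x3 neighbourhood offsets, including the centre pixel (0, 0).
NEIGHBOURHOOD = list(product((-1, 0, 1), repeat=2))


def localize_peaks(frame, threshold):
    """Sub-pixel (row, col) positions of isolated bright spots in one 2D frame.

    A pixel is a peak if it exceeds `threshold` (assumed >= 0) and is strictly
    brighter than its 8 neighbours; its position is refined by an
    intensity-weighted centroid over the 3x3 window. Assumes non-negative amplitudes.
    """
    rows, cols = len(frame), len(frame[0])
    points = []
    for r, c in product(range(1, rows - 1), range(1, cols - 1)):
        v = frame[r][c]
        if v <= threshold:
            continue
        window = [(r + dr, c + dc, frame[r + dr][c + dc]) for dr, dc in NEIGHBOURHOOD]
        if any(w >= v for y, x, w in window if (y, x) != (r, c)):
            continue  # not a strict local maximum
        total = sum(w for _, _, w in window)
        points.append((sum(y * w for y, _, w in window) / total,
                       sum(x * w for _, x, w in window) / total))
    return points


def localization_image(frames, threshold, upsample):
    """Superimpose localized positions from many frames on a finer grid."""
    rows, cols = len(frames[0]), len(frames[0][0])
    grid = [[0] * (cols * upsample) for _ in range(rows * upsample)]
    for frame in frames:
        for y, x in localize_peaks(frame, threshold):
            # Coarse pixel r spans [r - 0.5, r + 0.5), i.e. fine cells
            # r*upsample ... (r+1)*upsample - 1.
            gy = min(int((y + 0.5) * upsample), rows * upsample - 1)
            gx = min(int((x + 0.5) * upsample), cols * upsample - 1)
            grid[gy][gx] += 1
    return grid
```

Each frame contributes only a handful of point positions, so many frames are needed before vessel-shaped structures fill in; that accumulation is where the temporal-resolution cost comes from.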

A multinational research team has used deep-learning technology to dramatically increase image acquisition speed without sacrificing image quality, for both photoacoustic microscopy (PAM) and photoacoustic computed tomography (PACT). The artificial intelligence (AI)-based method, described in Light: Science and Applications, provides a 12-fold increase in imaging speed and a more than 10-fold reduction in the number of images required. This advance could enable the use of localization photoacoustic imaging techniques in preclinical or clinical applications that require both high speed and fine spatial resolution, such as studies of instantaneous drug response.

Photoacoustic imaging uses optical excitation and ultrasonic detection to enable multiscale in vivo imaging. The technique works by shining short laser pulses onto biomolecules, which absorb the excitation light pulses, undergo transient thermo-elastic expansion, and transform their energy into ultrasonic waves. These photoacoustic waves are then detected by an ultrasound transducer and used to produce either PAM or PACT images.
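
For reference, the initial pressure generated by a short laser pulse is commonly described by the standard textbook relation below, which is not given in the article. Here $\Gamma$ is the dimensionless Grüneisen parameter, $\mu_a$ the optical absorption coefficient and $F$ the local optical fluence; the relation assumes the pulse is short enough for thermal and stress confinement. Because the photoacoustic wave travels only one way, from absorber to transducer (unlike a pulse-echo ultrasound signal), the depth $z$ of a source follows directly from its arrival time $t$, assuming a speed of sound of roughly $c \approx 1540$ m/s in soft tissue:

$$
p_0 = \Gamma\,\mu_a\,F, \qquad z = c\,t
$$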

Researchers from Pohang University of Science and Technology (POSTECH) and the California Institute of Technology have developed a computational strategy based on deep neural networks (DNNs) that can reconstruct high-density super-resolution images from far fewer raw image frames. The deep-learning-based framework employs two distinct DNN models: a 3D model for volumetric label-free localization optical-resolution PAM (OR-PAM), and a 2D model for planar labelled localization PACT.

Principal investigator Chulhong Kim, director of POSTECH’s Medical Device Innovation Center, and colleagues explain that the network for localization OR-PAM contains 3D convolutional layers to preserve the 3D structural information of the volumetric images, while the network for localization PACT has 2D convolutional layers. The DNNs learn voxel-to-voxel or pixel-to-pixel transformations that map a sparse localization-based photoacoustic image to a dense one. The researchers trained both networks simultaneously and, as training progressed, the networks learned the distribution of real images and synthesized new images increasingly similar to real ones.
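
The difference between the two network types can be illustrated with a toy, pure-Python "valid" convolution (strictly, the cross-correlation that deep-learning convolution layers compute). A 3D kernel slides along depth as well as in-plane, so each output mixes information from neighbouring depth slices; a 2D kernel applied slice by slice cannot. The article gives no further architectural detail, so this is only a sketch of the operation itself, not the authors' networks. `conv_valid` and its helpers are hypothetical names; a real model would use a deep-learning framework's built-in, trainable 2D and 3D convolution layers.

```python
from itertools import product


def shape_of(a):
    """Shape of a rectangular nested list, e.g. (depth, rows, cols)."""
    shape = []
    while isinstance(a, list):
        shape.append(len(a))
        a = a[0]
    return tuple(shape)


def get(a, idx):
    """Read the element of nested list `a` at index tuple `idx`."""
    for i in idx:
        a = a[i]
    return a


def build(shape, fn, prefix=()):
    """Build a nested list of the given shape, filling each cell with fn(index)."""
    if not shape:
        return fn(prefix)
    return [build(shape[1:], fn, prefix + (i,)) for i in range(shape[0])]


def conv_valid(image, kernel):
    """'Valid' N-D cross-correlation of `image` with a kernel of the same rank.

    Works unchanged for 2D (rows, cols) and 3D (depth, rows, cols) inputs:
    the kernel slides along every axis, so a 3D kernel also spans depth.
    """
    ishape, kshape = shape_of(image), shape_of(kernel)
    if len(ishape) != len(kshape):
        raise ValueError("image and kernel must have the same number of axes")
    oshape = tuple(i - k + 1 for i, k in zip(ishape, kshape))
    offsets = list(product(*(range(k) for k in kshape)))

    def value_at(out_idx):
        return sum(get(kernel, off) * get(image, tuple(o + d for o, d in zip(out_idx, off)))
                   for off in offsets)

    return build(oshape, value_at)


# Toy volume: three 3x3 depth slices filled with 1, 2 and 3.
volume = [[[d + 1] * 3 for _ in range(3)] for d in range(3)]
kernel_3d = [[[1] * 3 for _ in range(3)] for _ in range(3)]
kernel_2d = [[1] * 3 for _ in range(3)]

print(conv_valid(volume, kernel_3d))               # [[[54]]]
print([conv_valid(s, kernel_2d) for s in volume])  # [[[9]], [[18]], [[27]]]
```

The 3D kernel returns a single value of 54 that combines all 27 voxels across the three slices, whereas the 2D kernel returns 9, 18 and 27, one per slice, with no information shared between depths.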

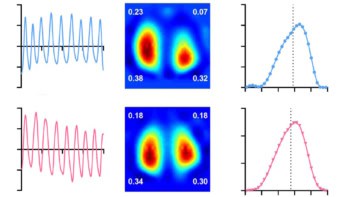

To test their approach, the researchers used OR-PAM to image a region of interest in a mouse ear. From 60 randomly selected frames, they reconstructed a dense localization OR-PAM image, which served as both the training target and the ground truth for evaluation. They also reconstructed sparse localization OR-PAM images from fewer frames as inputs to the DNNs. The imaging time for the dense image was 30 s, whereas a sparse image using five frames took just 2.5 s.
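
The construction of a sparse/dense training pair can be sketched as follows. Assumptions: each acquired frame has already been turned into a 2D localization count map; the sparse input uses a random subset of the same frames as the dense target (the article does not say whether the subsets overlap); and acquisition time scales linearly with frame count at the 0.5 s per frame implied by 60 frames in 30 s. `make_training_pair` and `FRAME_TIME_S` are hypothetical names.

```python
import random

# Assumed constant per-frame acquisition time, implied by 60 frames in 30 s.
FRAME_TIME_S = 30 / 60


def accumulate(maps):
    """Pixel-wise sum of equally sized 2D localization maps."""
    rows, cols = len(maps[0]), len(maps[0][0])
    return [[sum(m[r][c] for m in maps) for c in range(cols)] for r in range(rows)]


def make_training_pair(frame_maps, n_dense=60, n_sparse=5, seed=0):
    """Return (sparse_image, dense_image, sparse_time_s, dense_time_s).

    The dense target sums `n_dense` randomly chosen frames; the sparse input
    sums the first `n_sparse` of those, which is itself a random subset
    because random.sample returns the chosen items in random order.
    """
    if not 0 < n_sparse <= n_dense <= len(frame_maps):
        raise ValueError("need 0 < n_sparse <= n_dense <= number of frames")
    rng = random.Random(seed)
    chosen = rng.sample(range(len(frame_maps)), n_dense)
    dense = accumulate([frame_maps[i] for i in chosen])
    sparse = accumulate([frame_maps[i] for i in chosen[:n_sparse]])
    return sparse, dense, n_sparse * FRAME_TIME_S, n_dense * FRAME_TIME_S
```

With the article's frame counts (60 and 5), the function reports 30.0 s and 2.5 s, the 12-fold difference quoted for OR-PAM.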

The dense and DNN-generated images had higher signal-to-noise ratios and visualized vessel connectivity better than the sparse image. Notably, a blood vessel that was invisible in the sparse image was revealed with high contrast in the DNN-generated localization image.
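
The article does not state how signal-to-noise ratio was measured. One definition often used for photoacoustic images, assumed here, divides the mean amplitude inside a vessel (signal) region by the standard deviation of a background region and expresses the result in decibels. `snr_db` and the boolean-mask layout are hypothetical:

```python
import math
from statistics import mean, pstdev


def snr_db(image, signal_mask):
    """SNR in dB: 20*log10(mean signal / standard deviation of background).

    `image` and `signal_mask` are equally sized 2D lists; pixels where the
    mask is truthy count as signal, all others as background noise.
    """
    signal, background = [], []
    for row, mask_row in zip(image, signal_mask):
        for value, is_signal in zip(row, mask_row):
            (signal if is_signal else background).append(value)
    noise = pstdev(background)
    if noise == 0:
        raise ValueError("background has zero standard deviation")
    return 20 * math.log10(mean(signal) / noise)
```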

The researchers also used PACT to image the mouse brain in vivo following injection of dye droplets. They reconstructed a dense localization PACT image using 240,000 dye droplets, plus a sparse image using 20,000 droplets. The imaging time was reduced from 30 min for the dense image to 2.5 min for the sparse image. The vascular morphology was difficult to recognize in the sparse image, whereas the DNN and dense images clearly visualized the microvasculature.
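
As a quick check, the acquisition times and data volumes quoted for the two experiments give the same ratio, consistent with the 12-fold speed-up and the more-than-10-fold reduction in required images reported for the method:

$$
\begin{aligned}
\text{OR-PAM:}\quad & \frac{30\ \mathrm{s}}{2.5\ \mathrm{s}} = \frac{60\ \text{frames}}{5\ \text{frames}} = 12,\\
\text{PACT:}\quad & \frac{30\ \mathrm{min}}{2.5\ \mathrm{min}} = \frac{240\,000\ \text{droplets}}{20\,000\ \text{droplets}} = 12.
\end{aligned}
$$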


A particular advantage of applying the DNN framework to photoacoustic imaging is that it is scalable, from microscopy to computed tomography, and thus could be used for various preclinical and clinical applications on different scales. One practical application could be the diagnosis of skin conditions and diseases that require accurate structural information. And because the framework can significantly reduce imaging time, it could make monitoring of brain haemodynamics and neuronal activity feasible.

“The improved temporal resolution makes high-quality monitoring possible by sampling at a higher rate, allowing analysis of fast changes that cannot be observed with conventional low temporal resolution,” the authors conclude.

AI in Medical Physics Week is supported by Sun Nuclear, a manufacturer of patient safety solutions for radiation therapy and diagnostic imaging centres. Visit www.sunnuclear.com to find out more.