Humans like to build robust systems – ones that resist change and minimize unreliable or undesirable results. In quantum computing, this desire manifests itself as fault-tolerant quantum computing (FTQC), which aims to protect both the quantum state and the logic gates that manipulate it against interventions from the environment. Although this is a resource-intensive task, physicists at IBM Research recently showed that they could partially meet this requirement, thanks to some real-life quantum “magic”.

The process of cushioning quantum states against uncontrollable interventions is called quantum error correction (QEC). In this process, information that would ideally be stored in a few quantum bits, or qubits, is instead stored in many more. The resulting redundancy is used to detect and correct the errors that these interventions cause. In QEC jargon, the mapping of the ideal-case quantum state (the logical state) into the noise-protected state (the physical state) is termed encoding. There are multiple ways to perform this encoding, and each such scheme is called an error-correction code.
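
To make the idea of encoding concrete, here is a minimal sketch using the textbook three-qubit bit-flip code, simulated with NumPy. This code is chosen purely for illustration and is not the one used in the IBM experiment; the function names (`encode`, `syndrome`, `correct`) are hypothetical. One logical qubit is spread over three physical qubits, and two parity checks reveal which qubit, if any, was flipped without revealing the stored information.

```python
import numpy as np

I2 = np.eye(2)
X = np.array([[0, 1], [1, 0]], dtype=float)   # bit-flip error / correction
Z = np.array([[1, 0], [0, -1]], dtype=float)  # used in the parity checks


def kron_all(*ops):
    """Tensor product of single-qubit operators, leftmost qubit first."""
    out = np.array([[1.0]])
    for op in ops:
        out = np.kron(out, op)
    return out


def encode(a, b):
    """Map the logical state a|0> + b|1> to the physical state a|000> + b|111>."""
    state = np.zeros(8, dtype=complex)
    state[0b000] = a
    state[0b111] = b
    return state


def syndrome(state):
    """Eigenvalues (+1 or -1) of the parity checks Z0Z1 and Z1Z2."""
    s1 = np.real(np.vdot(state, kron_all(Z, Z, I2) @ state))
    s2 = np.real(np.vdot(state, kron_all(I2, Z, Z) @ state))
    return int(round(s1)), int(round(s2))


# Each syndrome points to the single-qubit flip that undoes the error.
RECOVERY = {
    (1, 1): kron_all(I2, I2, I2),   # no error detected
    (-1, 1): kron_all(X, I2, I2),   # flip on qubit 0
    (-1, -1): kron_all(I2, X, I2),  # flip on qubit 1
    (1, -1): kron_all(I2, I2, X),   # flip on qubit 2
}


def correct(state):
    """Apply the recovery operation selected by the measured syndrome."""
    return RECOVERY[syndrome(state)] @ state


encoded = encode(0.6, 0.8j)
damaged = kron_all(I2, X, I2) @ encoded      # environment flips qubit 1
print(syndrome(damaged))                     # which checks were violated
print(np.allclose(correct(damaged), encoded))
```

This simple code only handles a single bit flip; codes used in practice must also protect against phase errors, which is part of why the qubit overhead grows so quickly.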

FTQC takes QEC as its first step and then uses appropriate encoded gates and measurements to robustly perform a computation. For it to succeed, the error rate must fall below a certain threshold. Achieving this low error rate remains a significant challenge due to the huge number of physical qubits required in the aforementioned encodings. However, researchers are making significant improvements to FTQC sub-routines.

A little bit of magic

One such improvement recently came from an IBM team led by Maika Takita and Benjamin J Brown. In this work, which is published in Nature, the team proposed and implemented an error-suppressing encoding circuit that prepares high-fidelity “magic states”. To understand what a magic state is, one must first recognize that some operations are much easier to implement in FTQC than others. These operations are known as the stabiliser or Clifford operations. But these operations alone are not enough for universal quantum computation: circuits built only from them can be simulated efficiently on a classical computer. Does this render Clifford circuits useless? No! When a class of states called magic states is injected into these circuits, the circuits can do any computation that quantum theory permits. In other words, injecting magic states introduces universality into Clifford circuits.
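
As an illustration of what “injecting” a magic state means, the sketch below simulates a textbook example in NumPy: implementing the non-Clifford T gate using only a CNOT gate, a measurement and a conditional S gate (all Clifford operations), plus one copy of the single-qubit magic state (|0⟩ + e^{iπ/4}|1⟩)/√2. This is a different, simpler magic state than the CZ state prepared by the IBM team, and the helper names `inject_t` and `same_up_to_phase` are hypothetical. The measurement outcome is passed in explicitly so that both branches can be checked.

```python
import numpy as np

OMEGA = np.exp(1j * np.pi / 4)
T = np.diag([1, OMEGA])          # the non-Clifford gate we want to realise
S = np.diag([1, 1j])             # Clifford correction gate
# CNOT with the data qubit (first) as control and the ancilla (second) as target
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)
MAGIC = np.array([1, OMEGA]) / np.sqrt(2)   # single-qubit magic state


def inject_t(psi, outcome):
    """Apply T to `psi` using Clifford operations and one magic state.

    `outcome` (0 or 1) is the result of measuring the ancilla in the Z basis.
    """
    joint = CNOT @ np.kron(psi, MAGIC)       # index = 2 * data + ancilla
    data = joint.reshape(2, 2)[:, outcome]   # project the ancilla onto |outcome>
    data = data / np.linalg.norm(data)
    if outcome == 1:
        data = S @ data                      # feed-forward correction
    return data


def same_up_to_phase(u, v):
    """True if two normalised states differ only by a global phase."""
    return bool(np.isclose(abs(np.vdot(u, v)), 1.0))


psi = np.array([0.6, 0.8j])
for outcome in (0, 1):
    print(outcome, same_up_to_phase(inject_t(psi, outcome), T @ psi))
```

Whichever outcome occurs, the data qubit ends up in the state T|ψ⟩ up to an unobservable global phase. The magic state is consumed in the process, which is why a computation needs a steady supply of them.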

The next important question becomes, how do you prepare a magic state? Until a few decades ago, the best techniques for doing so only created low-quality copies of these states. Then, two researchers, Sergey Bravyi and Alexei Kitaev, proposed a method called magic state distillation (MSD). If one starts with many qubits in noisy copies of magic states, MSD can be used to create a single, purified magic state. Crucially, this is possible using only Clifford operations and measurements, which are “easy” in FTQC. Bravyi and Kitaev’s insight created a leading FTQC model of computation called quantum computation via MSD.

Of course, one can always start with a circuit that allows for operations outside the Clifford set. Then no input state remains “magical”. But the big advantage of sticking to Clifford circuits supplemented by magic states is that their universality reduces the general fault-tolerance problem to that of performing fault-tolerant Clifford circuits only. Moreover, most known error-correcting codes are defined in terms of Clifford operations, making this model a crucial one for near-future exploration.

High fidelity of states

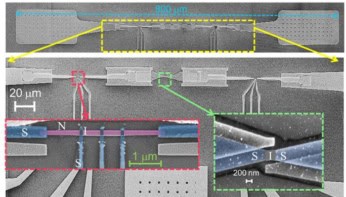

While MSD requires a large number of attempts to prepare the intended state, the IBM researchers showed that these can be reduced by preparing input magic states (to MSD) with high fidelity – meaning that the states prepared are very close to those required. In a proof-of-concept demonstration, the IBM team prepared an encoded magic state that is robust against any single-qubit error. Using four qubits of the 27-qubit IBM Falcon processor on the ibm_peekskill system to encode the controlled-Z (CZ) magic state, they achieved an infidelity (one minus the fidelity) comparable to the square of the infidelity they would have obtained without encoding the initial state.

The team also improved the encoded state’s yield – that is, the number of magic states produced over time – by introducing adaptivity, which is where mid-circuit measurement outputs are fed forward to choose the next operations needed to reach the required magic state. “Basically, we can create more magic states and less junk,” says team leader Benjamin Brown, who holds appointments at IBM Quantum’s T J Watson Research Center in New York, US and at IBM Denmark. Both these observed advantages, in yield and fidelity, are due to quantum error correction that suppresses the noise accumulated during state preparation.

Verifying the states prepared

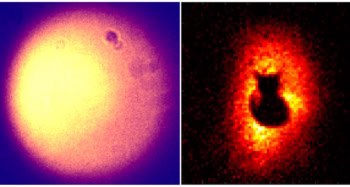

The team verified that they had created the intended magic state (and hence the claimed gain in the fidelity) via tomography experiments. In one experiment, the fault-tolerant circuits measure the physical state in such a way that only the information about the logical state is revealed. This is termed logical tomography. Meanwhile, the other experiment, called physical tomography, determines the exact physical state. As might be expected, logical tomography is more efficient and requires fewer resources than physical tomography. For example, in the case of the CZ state, the former required 7 while the latter required 81 measurement circuits.
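
The figure of 81 is what a standard full-tomography recipe gives for four physical qubits: each qubit is measured in the X, Y or Z basis, so there are 3⁴ distinct measurement settings. The short sketch below simply enumerates them. It assumes this is how the count arises and is not a description of the team's exact protocol; the function name `pauli_settings` is hypothetical.

```python
from itertools import product


def pauli_settings(n_qubits):
    """All ways of choosing an X, Y or Z measurement basis for each qubit."""
    return ["".join(bases) for bases in product("XYZ", repeat=n_qubits)]


settings = pauli_settings(4)
print(len(settings))   # 81
print(settings[:3])    # ['XXXX', 'XXXY', 'XXXZ']
```

Because this count grows as 3ⁿ with the number of qubits n, physical tomography quickly becomes impractical for larger codes, which is one reason logical tomography is attractive.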


As well as demonstrating the advantage of quantum error correction, the new work opens up a new research pathway: using adaptivity to prepare high-fidelity, high-yield magic states, which could have important implications for developing new fault-tolerant computing techniques. “This experiment sets us on the path to solving one of the most important challenges in quantum computing – running high-fidelity logical gates on error-corrected qubits,” Brown says.